Vision Hack 2024

My notes from Vision Hack, the first global hackathon for Apple Vision Pro.

Vision Hack was the finale to my busy summer of visionOS development. It was something I was looking forward to all summer as a chance to challenge myself. It was a lot of fun! I got a chance to talk with many people I’ve only known through text mediums like Threads and X. I also got to see tons of amazing ideas from the other developers who joined this hackathon. I plan to explore some of those other apps on Step Into Vision.

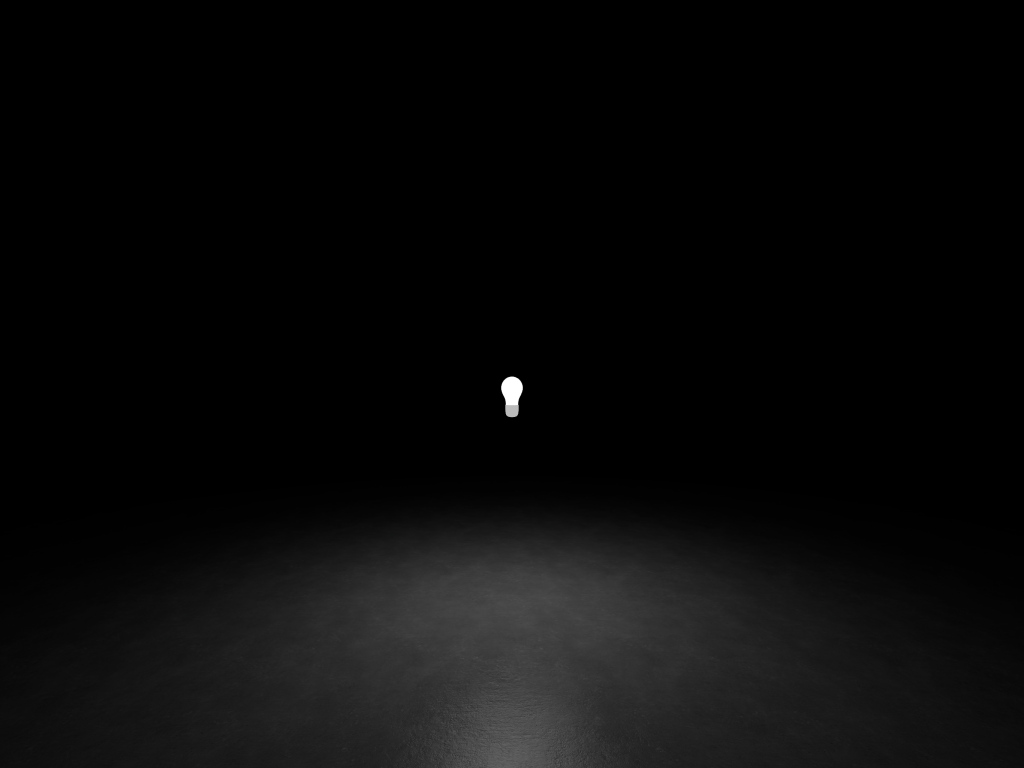

I showed up to Vision Hack with what I thought was a pretty bright idea. I was going to build a prototype app very similar to Project Graveyard, but instead of memorializing dead projects, this one would be for organizing ideas in the form of lightbulbs in space. The idea was to allow users to create a record with the name of an idea and perhaps some notes. Then the record would be transformed into a 3D model of a lightbulb in an immersive scene. Users could then move the lightbulbs around and place them anywhere they want. They would be able to scale the 3D model and adjust the brightness of the light to indicate the quality of the idea.

I thought it was a great idea and I still kind of do… But I quickly ran into limitations. Here are a few of the walls that I ran right into.

Note: I could have researched these ideas before the hackathon started, but I avoided doing that. For me, finding out what is possible is a core part of development and I felt like I would be cheating if I did the research ahead of time.

- I wanted to create an immersive space with passthrough, then apply a dark surroundings effect to dim the real-world lights. Unfortunately, dynamic lighting in visionOS has no impact on passthrough. It only affects 3D models in a scene. Instead, I ended up making a very dark environment with a floor and a dome.

- RealityKit limits the number of dynamic lights to eight per scene. I don’t know about you, but I have way more than eight ideas!

- Instead of using dynamic lights to indicate the brightness of ideas, could I use emissive materials? No. While I can overload the emissive intensity and that will have a small impact on the light that the material emits, I could see no visual difference on the lightbulbs themselves. Basically, when looking at the 3D models, I could not distinguish between materials with an intensity of 1, 10, 100, or even 1000.
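For anyone who wants to poke at the same walls, here is a minimal sketch of the two approaches from the list above. This is not the code from my project. The helper names (`makeIdeaBulb`, `makeBulbs`, `maxDynamicLights`) are hypothetical, the sphere is a stand-in for a real lightbulb model, and I am assuming visionOS 2 or later, where `PointLightComponent` is available. It sets an emissive material on every bulb and gives a real point light only to the eight brightest ideas, which is one possible way to stay inside the limit.

```swift
import RealityKit
import UIKit

/// The eight-light limit described above, kept as a named constant (assumption: it applies per scene).
let maxDynamicLights = 8

/// Hypothetical helper: a sphere "bulb" with an emissive material and,
/// if requested, a point light attached as a child entity.
@MainActor
func makeIdeaBulb(brightness: Float, addLight: Bool) -> ModelEntity {
    var material = PhysicallyBasedMaterial()
    material.baseColor = .init(tint: .white)
    material.emissiveColor = .init(color: .yellow)
    // In my tests, values of 1, 10, 100, and 1000 looked the same on the model itself.
    material.emissiveIntensity = brightness

    let bulb = ModelEntity(
        mesh: .generateSphere(radius: 0.05),
        materials: [material]
    )

    if addLight {
        // Dynamic lights only affect other 3D content, never passthrough.
        let light = Entity()
        light.components.set(PointLightComponent(
            color: .yellow,
            intensity: 1000 * brightness, // arbitrary scale chosen for this sketch
            attenuationRadius: 2
        ))
        bulb.addChild(light)
    }
    return bulb
}

/// Hypothetical helper: builds one bulb per idea, but only the brightest
/// `maxDynamicLights` ideas get a real light.
@MainActor
func makeBulbs(for brightnessValues: [Float]) -> [ModelEntity] {
    let litIndices = Set(
        brightnessValues.indices
            .sorted { brightnessValues[$0] > brightnessValues[$1] }
            .prefix(maxDynamicLights)
    )
    return brightnessValues.indices.map { index in
        makeIdeaBulb(
            brightness: brightnessValues[index],
            addLight: litIndices.contains(index)
        )
    }
}
```

Calling `makeBulbs(for:)` with ten brightness values returns ten bulbs, eight of which carry a point light. It keeps the scene within the limit, but it does nothing to make the unlit bulbs look any different from one another.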

Dynamic lights wouldn’t work as I had hoped and emissive materials didn’t capture the distinction between ideas.

My idea came crashing down.

It was late Saturday afternoon. I had already used up half of the time for the hackathon and all I had were failures. I was brooding and feeling sorry for myself. I even thought about dropping out. Then I had another idea! What if I set aside the original plan and embraced failure instead? I kept reaching for idea after idea and they kept crashing down. Maybe I could do something with that concept.

I decided to drop the data-driven app and just focus on a short immersive experience where I could share my sense of optimistic and persistent failure.

The result is some sloppy code that gets the idea across pretty well. When you enter the scene, you see a single idea in front of you, represented as a lightbulb. When you reach out to it (or use the tap gesture), the light goes out and the bulb falls to the floor. Another light appears somewhere else in the scene and you repeat the process. There is no real ending to this. You can keep making lightbulbs until you use up all the system resources and it crashes, although my guess is that would take many hours. Your ideas are limitless, but your resources are not.
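If you want a feel for that loop without opening the project, here is a rough sketch of how it could be wired up. It is a simplified stand-in written for this post, not the hackathon code. The names (`BrightIdeaSketch`, `makeTappableBulb`, `burnOut`, `randomPosition`) are hypothetical, the bulb is a plain sphere, and it only handles the tap gesture, not reaching out with your hand.

```swift
import SwiftUI
import RealityKit
import UIKit

/// Hypothetical helper: a glowing sphere that can receive tap gestures.
@MainActor
func makeTappableBulb(at position: SIMD3<Float>) -> ModelEntity {
    var material = PhysicallyBasedMaterial()
    material.emissiveColor = .init(color: .yellow)
    material.emissiveIntensity = 1
    let bulb = ModelEntity(mesh: .generateSphere(radius: 0.05), materials: [material])
    bulb.position = position
    // Both components are needed for an entity to be a gesture target.
    bulb.components.set(InputTargetComponent())
    bulb.components.set(CollisionComponent(shapes: [.generateSphere(radius: 0.05)]))
    return bulb
}

/// Hypothetical helper: turns the glow off and hands the bulb to the physics simulation.
@MainActor
func burnOut(_ bulb: ModelEntity) {
    if var material = bulb.model?.materials.first as? PhysicallyBasedMaterial {
        material.emissiveColor = .init(color: .black)
        material.emissiveIntensity = 0
        bulb.model?.materials = [material]
    }
    // A dead idea should not respond to taps again.
    bulb.components.remove(InputTargetComponent.self)
    // A dynamic body is affected by gravity, so the bulb falls.
    bulb.components.set(PhysicsBodyComponent(massProperties: .default, material: nil, mode: .dynamic))
}

/// Hypothetical helper: somewhere in front of the viewer, at roughly arm height.
func randomPosition() -> SIMD3<Float> {
    SIMD3<Float>(.random(in: -1...1), .random(in: 1...1.8), .random(in: -2 ... -1))
}

struct BrightIdeaSketch: View {
    var body: some View {
        RealityView { content in
            let root = Entity()
            content.add(root)

            // A static floor so fallen bulbs have something to land on.
            let floor = ModelEntity(
                mesh: .generatePlane(width: 10, depth: 10),
                materials: [SimpleMaterial(color: .black, isMetallic: false)]
            )
            floor.components.set(CollisionComponent(shapes: [.generateBox(width: 10, height: 0.01, depth: 10)]))
            floor.components.set(PhysicsBodyComponent(massProperties: .default, material: nil, mode: .static))
            root.addChild(floor)

            root.addChild(makeTappableBulb(at: [0, 1.4, -1]))
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    guard let bulb = value.entity as? ModelEntity else { return }
                    let parent = bulb.parent
                    burnOut(bulb)
                    // The next idea appears somewhere else in the scene.
                    parent?.addChild(makeTappableBulb(at: randomPosition()))
                }
        )
    }
}
```

Nothing here ever removes the fallen bulbs, which matches the experience: every tap leaves one more dead idea on the floor.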

To my delight and surprise, my submission, A Bright Idea, was awarded the prize for Most Beautiful Design.

You can download the Xcode project here. Sorry for the sloppy and repetitive code. I didn’t have time to clean it up before the hackathon ended.